CONTENT WARNING: depression, anxiety — please consider not reading beyond this point if these topics tend to trigger you in a bad way

Hi! 👋 I am Jo, I also go by Jojo, and I suffer from depression and anxiety. I find myself going through good periods, not-so-good periods, and… bad periods. Some things make me feel depressed, while other things trigger serious bouts of depression. I haven’t quite figured out what does what in what respect, just that nothing is ever black and white, or logical.

By writing about my experiences I am hoping to figure out more about myself and how to navigate my life through depression and anxiety. By sharing some of what I write, I hope to perhaps provide those willing to read with food for thought.

On a scale of 1 to 10, I feel about a 6 today.

If some of the following reads familiar, that’s because I wrote this post as an expansion on a LinkedIn post I made earlier today.

WIRED published quite the piece today, revealing how one particular local government’s algorithmic system operates in trying to detect potential welfare fraud. It’s… sinister. And it’s exposed as actively, unfairly targeting and affecting people who are already the most vulnerable in society; people likely to be the least equipped to defend themselves against systems and structures that are supposed to help them but are designed and implemented in ways that victimise them.

WIRED, in this article, laid bare just one local government’s algorithmic system implemented to make life-changing decisions about vulnerable citizens, and how it is designed to discriminate based on age, ethnicity and gender.

To me, this is about way more than (local) governments and welfare payments, this is about – arguably – every AI, algorithm or other automation project everywhere (not just the public sector).

And if you're in a position to make decisions on projects and initiatives like this, I really urge you to read the entire WIRED article and take it to heart – and perhaps, if I may ask, the rest of my humble Substack post right here – so you can make better decisions rather than decisions that serve you better.

As much as I love technological advancements, the way they are being designed and applied fills me with increasing dread. The heading of this post isn’t just a quirky, hyperbolic headline meant to attract your attention; it truly represents how I feel. I write these pieces mostly for myself, I don’t use SEO language, I don’t pay for promotions, I know my place in society (right where I want to be: powerless, insignificant, unimportant, fairly invisible), and in work terms I’ve only ever held junior/lower-rung/support positions (again, right where I prefer to be). But some (not all) of my reasons for going public with at least some of my writing are (1) to hold myself accountable and (2) to perhaps, possibly, reach one person interested in a different perspective, one not written by someone just like them.

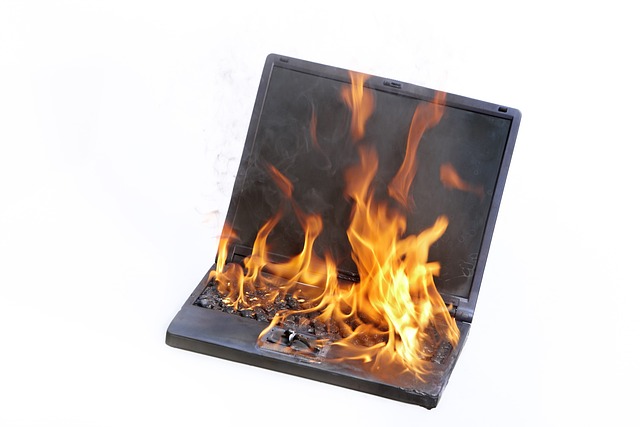

Image by andreas160578 from Pixabay

Because I can tell you this:

Every IT/automation/algorithmic/AI project or system I've ever been involved with was developed with the purpose of making things better, smoother, easier, faster and so on, and every such project or system failed to achieve that purpose for everyone.

There were always victims – real people facing negative effects – and always metric-based excuses wrapped in dehumanised language (“…only a small percentage of cases…”) but there were rarely fast solutions for those real people negatively affected and rarely any rapid structural improvements to prevent future victims, and that was always down to structural unwillingness and inability to listen, empathise, and appreciate that certain issues may be of little harm to one person but have a disastrous effect on others.

Every IT/automation/algorithmic/AI project I've ever been involved with started with documented safeguards put in place in terms of standards, ethics, and risks, but soon devolved into hastier implementation, standards being dropped, ethics going out the window, and so on, as corners were being cut. The official reasons listed in formal communications would always involve fluffy management speak like "from a cost effectiveness perspective", but unofficially you could bet it was about the vanity of self-interested decisionmakers eager to reach at least some project milestones (most likely owing to other people's hard work) so they could list those as ✨key achievement(s)✨ on their own personal CVs/resumés, in order to make a swift exit from the project and/or organisation before they could be held accountable for their careless oversight.

Best outcome? Everyone got paid, the project got abandoned part-way through, and the organisation ended up with yet another system, service or platform to manage, rather than the replacement for all the previous part-completed ones that this latest one was supposed to be.

Worst outcome? Real people were affected... badly. Like, seriously victimised or hurt. Not always to the extremes of the British Post Office scandal or the Dutch childcare benefits scandal, but damaging nonetheless.

Personally, I’ve worked in or dealt with too many places where workers did not get paid for their first month of new employment because an automated payroll had replaced the payroll professionals who were able to run a manual payroll for new joiners. Some of those workers would subsequently find themselves penalised by welfare support/benefits systems so automated that they weren't equipped to handle the consequences of a (former) claimant's new employer's flawed system. The blame would be put on the system(s), but that [bleeping] never helped the real people penalised and victimised as a consequence.

I've worked in too many places where people on freelance or zero-hour contracts were made to rely on (wealthy!) corporations' automated systems deliberately designed to not pay them what they were owed as soon as every management layer had ticked their respective boxes on the system to authorise payment (a process often over-long in itself), but only 30, 60, 90 or 120 days after authorisation.

I've worked in too many places where people's money would temporarily vanish into an automation black hole: they had paid, but their money somehow wasn't received. Granted, the same automation that made the error would eventually correct it, but this took so long that people, through no fault of their own, might find themselves victimised by the system, either by having to pay a second time (until one payment eventually came back to them) or by facing an automated, irreversible consequence or penalty for not paying in time, because they did not have the funds to pay twice and were made to wait too long to get their own money back.

I've worked in too many places where skilled professionals lost their jobs as a consequence of decisionmakers' mismanagement. In all of these cases, (former) decisionmakers who should have been held accountable always got out scot-free, because they could always blame successors or predecessors for any malfunction or damage, and no damage resulting from the GIGO (garbage in, garbage out) of any system or solution ever affected them personally, or at least never to the possibly devastating extent it affected anyone less fortunate than them. The worst consequence I’ve ever seen a decisionmaker face was the arrangement of a friendly departure with a golden handshake, enabling them to retire in comfort or fail upwards somewhere else. Even their erratic money-handling antics would be translated into personal ✨achievements✨, because now they could claim to have managed gazillion-dollar budgets as well as to have made huge cost savings by cutting corners and/or abandoning projects prematurely, regardless of how irresponsible and damaging that was. Numbers don’t lie, but they rarely tell the whole story. It’s just that, well, is anyone interested in the whole story? Hardly, it seems, and that, to me, is depressing as [bleep].

On a personal note, my experiences led me to seriously wonder to what extent one person's ✨key achievements✨ on their CV/resumé can be actual merits, when too often they served only that person or, at best, a limited number of people. How detrimental were such achievements to other people? How did they harm another person, a department, an organisation, or even entire clientèles and/or population groups? I appreciate that not everything can be beneficial to everyone, but my issue is that too much of what we do or are expected to do is too detrimental to others.

Technological advances with the potential to help us do better are being used to make people’s lives worse. The WIRED article I reference at the start shows that. The links I share to the British and Dutch scandals show that. The smaller examples I give from personal experience show that.

And I can’t begin to describe how much I struggle with this. Whatever metric or spin anyone tries to stick on this, this isn’t innovation. This isn’t progress. This isn’t growth. In fact, I’d say it’s quite the opposite. And I’m not quite sure how to go about navigating it, because it’s all too ugly to me.

Modern-day work life is not the cause of the depression and anxiety that have blighted my life for decades now, but it does at times drive my mental health in directions that I am desperate to veer away from. This is why I intend to write more pieces like this, if only to figure out more about myself and how to navigate my life. THIS IS NOT AN INVITATION FOR ONLINE TAKES OR UNSOLICITED ADVICE. No matter how much I (over)share, you do not know me. By the same token, if anything I write appears recognisable or relatable, I hope you don’t hate that, but also please don’t take anything I write about my mental health as guidance or knowledge about your own – I am NOT a mental health professional.

Subscribing to this Substack is free (and I intend to keep it that way for the foreseeable future), but it means you receive an email any time I post something new on here. If you would like to give me a one-off, no-strings-attached donation, feel free to buy me a coffee.

Reasons modern-day work life depresses me (2)

Part 2 of... I don't know yet how many